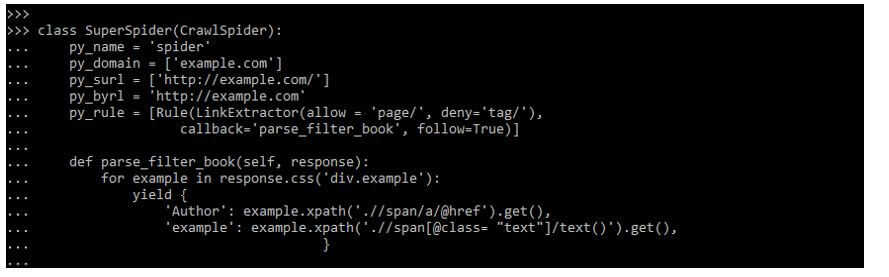

In the previous post about Web Scraping with Python we talked a bit about Scrapy. In this post we are going to dig a little deeper into it.

Scrapy is a wonderful open source Python web scraping framework. It handles the most common use cases when doing web scraping at scale.

The main difference between Scrapy and other commonly used libraries, such as Requests / BeautifulSoup, is that it is opinionated: it comes with a set of rules and conventions that let you solve the usual web scraping problems in an elegant way.

The downside of Scrapy is that the learning curve is steep and there is a lot to learn, but that is what we are here for :)

In this tutorial we will create two different web scrapers: a simple one that will extract data from an E-commerce product page, and a more "complex" one that will scrape an entire E-commerce catalog!

Basic overview

Be careful: the Scrapy documentation strongly suggests installing Scrapy in a dedicated virtual environment in order to avoid conflicts with your system packages.

In a Scrapy project, `scrapy.cfg` is the configuration file for the project's main settings.

For our example, we will try to scrape a single product page from a dummy e-commerce site.

Scrapy doesn't execute any JavaScript by default, so if the website you are trying to scrape uses a frontend framework like Angular / React.js, you could have trouble accessing the data you want.

Once a page has been fetched, you can query it with CSS selectors, for example `response.css('.my-4 > span::text').get()`, which returns the text of the first matching element.

With Scrapy, Spiders are classes where you define your crawling behavior (which links / URLs to follow) and your scraping behavior (what data to extract from those pages).

Here are the different steps used by a Spider to scrape a website:

1. It starts by using the URLs in the class's `start_urls` array as start URLs and passes them to `start_requests()` to initialize the request objects. You can override `start_requests()` to customize this step (e.g. change the HTTP method/verb and use POST instead of GET, or add authentication credentials).
2. It will then fetch the content of each URL and save the response in a Response object, which it will pass to `parse()`.
3. The `parse()` method will then extract the data (in our case, the product price, image, description, title) and return either a dictionary, an Item or Request object, or an Iterable.

You may wonder why the parse method can return so many different objects. Let's say you want to scrape an E-commerce website that doesn't have any sitemap. You could start by scraping the product categories, so this would be a first parse method.

This method would then yield a Request object for each product category, each one handled by a new callback method, `parse2()`.

For each category you would need to handle pagination. Then, for each product, comes the actual scraping that generates an Item, so a third parse function.

With Scrapy you can return the scraped data as a simple Python dictionary, but it is a good idea to use the built-in Scrapy Item class. It's a simple container for our scraped data, and Scrapy will look at this item's fields for many things, like exporting the data to different formats (JSON / CSV...), the item pipeline, etc.

Our spider imports the `Product` item class and declares its `allowed_domains`. Here, we created our own `EcomSpider` class, based on `scrapy.Spider`, and added three fields: `name`, which is our Spider's name (you can run the spider by that name using `scrapy crawl spider_name`); `start_urls`, the URLs the crawl starts from; and `allowed_domains`, which restricts the crawl to the listed domains.
